Learn how to leverage the potential of Gemini 2.0 Flash, both in its web version and via its mobile application.

AI tools can become valuable professional and personal allies in our daily lives. However, to maximize their benefits, it’s essential to understand how to use them. We want to explain how to use Gemini 2.0 Flash, Google’s multimodal and generative conversational AI with improved performance and speed.

Gemini 2.0 Flash is ideal for facilitating everyday tasks such as writing texts, generating ideas, assisted learning, creating images, and more. Furthermore, its multimodal capabilities, which allow it to process text, images, audio, video, and code, make it an extremely effective tool.

Gemini 2.0 Flash can be used via its web version or its dedicated mobile app. It’s worth noting that Google now lets any user access this AI from their browser without needing to log in with a Google account.

How to use Gemini 2.0 Flash from the web and the app

Using Google’s AI is very simple and works similarly to other chatbots like ChatGPT. First, you must access the web platform or download the app (available for Android and iOS) and register.

Once you’ve done this, you’ll be redirected to Gemini’s main dashboard. On the web version, you’ll see the space to interact with the chatbot and, on the left, a sidebar where you can manage the tool, access previous conversations, and start new ones.

On the mobile version, the main view will display the interaction panel. To resume previous conversations, you’ll need to tap the message icon in the top left corner of the screen.

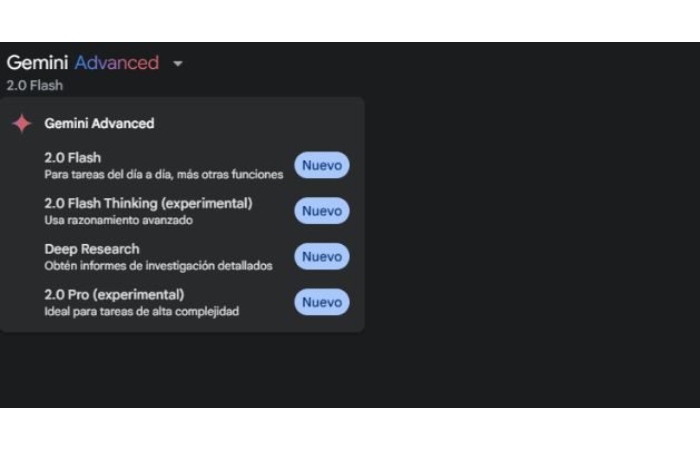

Gemini allows you to change the AI model you’re interacting with. To do this, you’ll find a drop-down model selector in the web version’s top left corner and in the app’s top centre. The name of the active version will appear below this feature.

In this case, we’re interested in selecting the Gemini 2.0 Flash option. However, if you subscribe to the paid Gemini Advanced plan, other versions are also available, such as the experimental Gemini 2.0 Flash Thinking model, the experimental Gemini 2.0 Pro, and Deep Research for advanced research.

With the Gemini 2.0 Flash model active, you can start taking full advantage of this AI. Let’s look at some use cases!

How to make a quick query in Gemini 2.0 Flash

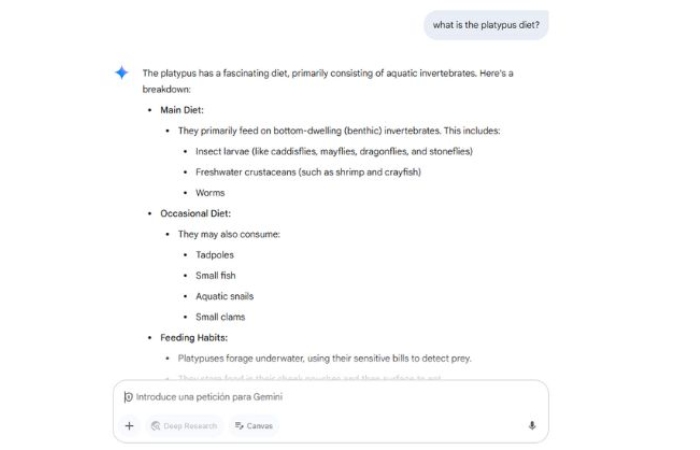

If you need quick and comprehensive answers without wading through articles online, you can type your question to Gemini (or ask it aloud using the microphone), and the AI will condense and organize the content to provide an answer that addresses your concerns.

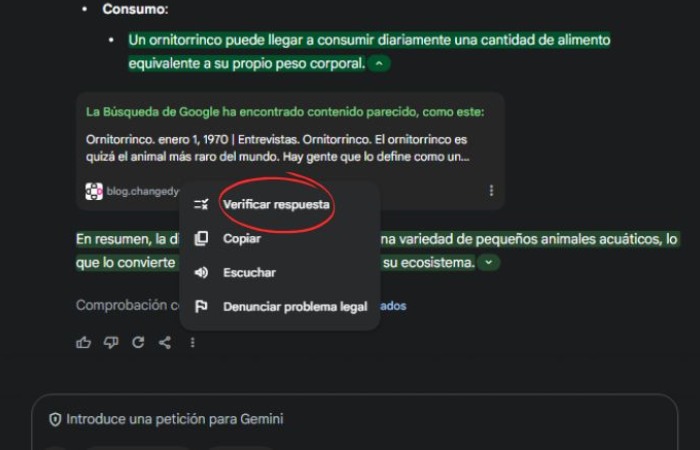

To verify the accuracy of the tool’s answers, open the options using the three-dot icon below Gemini’s answer and select the “Verify Answer” function. The AI will then show you the sources that support the data provided in its answer.

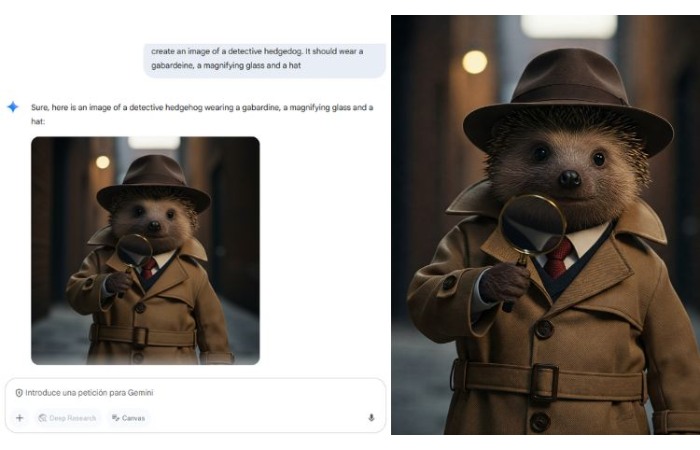

How to create images

Gemini 2.0 Flash leverages the power of Imagen 3, Google’s most powerful image-generating AI, to generate detailed, high-quality images. Enter a description of the image you want to create (the more detailed it is, the better the AI will adapt to your needs), and Gemini 2.0 Flash will produce it in a few seconds.

How to plan your vacation

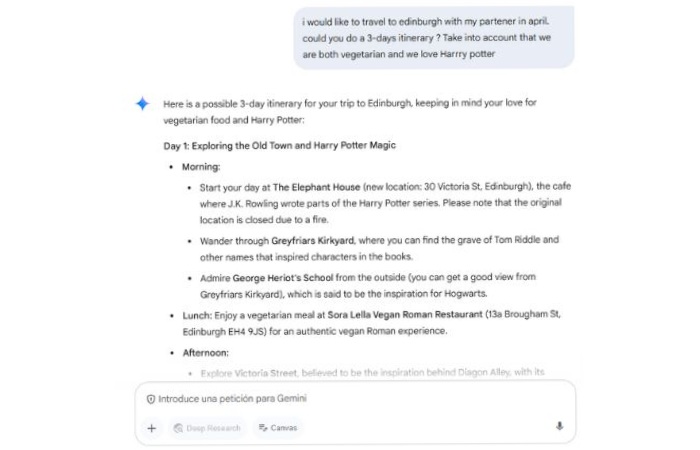

Generative AI is also a great option to help you plan your trips and adventures. In this case, I asked Gemini 2.0 Flash to design the ideal itinerary for Edinburgh, considering the specific conditions and my personal preferences: “I’d like to travel to Edinburgh with my partner in April. Could you suggest a three-day itinerary? Remember that we’re both vegetarians and love Harry Potter.”

How to gather information for your purchases

If, for example, you’re considering a new phone and need to conduct market research to determine which options best suit your needs, Gemini can be a great help.

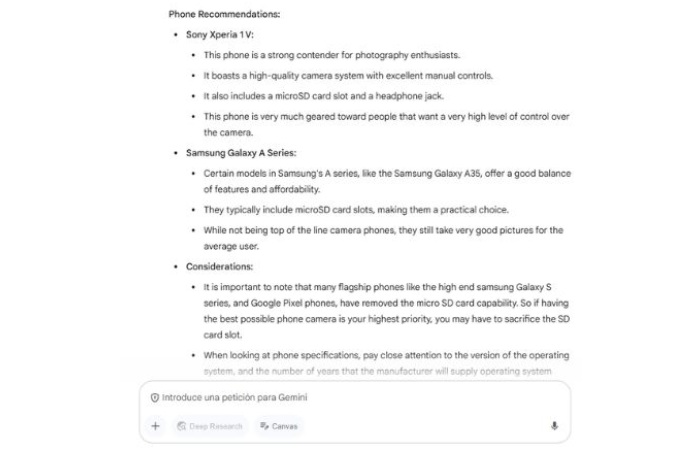

In response to the question, “I would like to buy a new cell phone with a good camera, sufficient memory, and the ability to insert an SD card. What are the best options on the market?” the tool offered me a list of phones from different ranges, along with links to their websites or a Google search.

After receiving the initial response, I asked it to compare the cell phone models it had just recommended. Its response wasn’t entirely satisfactory, as it produced a list of pros and cons for each model but didn’t indicate the best option.

Finally, it stated, “I recommend consulting detailed reviews and comparisons online to get a more complete picture of each model and decide which one best suits your needs and budget.”

Use Gemini 2.0 Flash on the web without logging in

As we mentioned at the beginning of this article, you no longer need to log in with a Google account to use Gemini in the browser. Google has removed this requirement, as OpenAI did before it with ChatGPT. This is a way to make its AI accessible to more people, thus generating interest in the tool.

However, this option has several significant limitations that, while allowing users to take advantage of some of its potential, aim to encourage them to sign up for Gemini. You won’t be able to upload files to work with or access your chat history, as your conversations won’t be saved without an account.

Gemini 2.0 Flash, a powerful and efficient model, is the default for this version. However, you must register to use Gemini 2.0 Flash Thinking or Deep Research.

FAQs

Google’s Gemini 2.0 Flash is an advanced AI model that optimizes user interactions through multimodal capabilities and enhanced reasoning. Below are some frequently asked questions about Gemini 2.0 Flash:

What is Gemini 2.0 Flash?

Gemini 2.0 Flash is part of Google’s Gemini family of AI models and offers improved performance for everyday tasks. It supports multimodal input, allowing it to process text and images, and includes a context window of 1 million tokens to handle large amounts of data. This model is designed to provide real-time responses and integrates seamlessly with various applications.

What are the key features of Gemini 2.0 Flash?

Multimodal input: Processes both text and images, enabling more dynamic interactions.

Native Tooling: Uses built-in tools, such as code execution and function calls, to enhance functionality.

Expanded Context Window: Manages up to 1 million tokens, enabling more comprehensive data processing.

Live Multimodal API: Supports low-latency, two-way voice and video interactions, facilitating real-time communication.
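
To get a feel for what a 1 million token context window means in practice, here is a minimal Python sketch that estimates whether a set of texts would fit. It assumes a rough rule of thumb of about 4 characters per token. Real tokenization varies by language and content, so treat the result as a ballpark only. The helper names and the output reserve are hypothetical choices for this illustration.

```python
CONTEXT_WINDOW_TOKENS = 1_000_000  # figure quoted in this article
CHARS_PER_TOKEN = 4  # assumption: rough heuristic, not an exact tokenizer


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a text using ceiling division."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(texts, reserve_for_output: int = 8_192) -> bool:
    """Check whether all texts, plus room reserved for the reply, fit in the window."""
    total = sum(estimate_tokens(text) for text in texts)
    return total + reserve_for_output <= CONTEXT_WINDOW_TOKENS
```

For example, `fits_in_context([report_text, notes_text])` would tell you whether two long documents can plausibly be sent together in a single request.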

How can developers access Gemini 2.0 Flash?

Developers can access Gemini 2.0 Flash through the Gemini API, which is available in Google AI Studio and Vertex AI. The model supports multimodal input and text output, with additional features such as text-to-speech and native image generation available to partners in early access.
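
As an illustration, the following Python sketch builds (but does not send) a text request for the Gemini API's REST interface using only the standard library. The endpoint, the model ID `gemini-2.0-flash`, and the `x-goog-api-key` header reflect the public REST interface as we understand it and should be checked against the official documentation. The helper names are hypothetical.

```python
import json
import urllib.request

# Assumed endpoint and model ID; verify against the official Gemini API docs.
API_BASE = "https://generativelanguage.googleapis.com/v1beta"
MODEL_ID = "gemini-2.0-flash"


def build_generate_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build a generateContent POST request for a plain-text prompt."""
    url = f"{API_BASE}/models/{MODEL_ID}:generateContent"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def extract_text(response_json: dict) -> str:
    """Join the text parts of the first candidate in a response."""
    parts = response_json["candidates"][0]["content"]["parts"]
    return "".join(part.get("text", "") for part in parts)
```

To actually send the request, you would pass the result to `urllib.request.urlopen`, parse the JSON reply, and hand it to `extract_text`. You need an API key from Google AI Studio for this.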

What is the Gemini 2.0 Flash Thinking Model?

The Gemini 2.0 Flash Thinking Model is an experimental variant trained to articulate its reasoning process in responses. This enhancement provides more robust reasoning capabilities than the base Gemini 2.0 Flash model.

What are the differences between the Gemini 2.0 Flash, Flash-Lite, and Pro models?

Flash: The general-purpose model for everyday tasks, with a context window of 1 million tokens.

Flash-Lite: Optimized for cost-effectiveness and speed, ideal for large-scale text output use cases.

Pro: Offers advanced features, including a 2 million token context window and stronger coding performance, ideal for complex tasks requiring extensive context.

How does Gemini 2.0 Flash handle function calls?

Gemini 2.0 Flash supports function calls using OpenAPI-compliant JSON schemas and Python function definitions using docstrings. This feature allows developers to describe functions that the model can invoke, enabling more dynamic and interactive applications.
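
To make this concrete, here is a simplified Python sketch of how a function with a docstring could be turned into an OpenAPI-style JSON declaration, and how a call returned by the model could be executed locally. It is a hand-rolled illustration, not the official SDK's implementation. The helper names, the `get_weather` function, and the `{"name": ..., "args": ...}` call shape are assumptions made for this example.

```python
import inspect

# Simplified mapping from Python annotations to JSON schema type names.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def to_function_declaration(func) -> dict:
    """Describe a Python function as an OpenAPI-style function declaration."""
    properties = {}
    required = []
    for name, param in inspect.signature(func).parameters.items():
        # Unannotated or unknown types fall back to "string" in this sketch.
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def run_function_call(call: dict, registry: dict):
    """Execute a call shaped like {"name": ..., "args": {...}} against local functions."""
    return registry[call["name"]](**call.get("args", {}))


def get_weather(city: str, days: int = 1) -> str:
    """Return a short weather forecast for a city."""
    # Hypothetical example function with a canned answer.
    return f"Forecast for {city} over {days} day(s): mild."


declaration = to_function_declaration(get_weather)
```

The docstring becomes the description the model reads when deciding whether to call the function, so it is worth writing it clearly.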

What are the prices for Gemini 2.0 Flash?

Specific pricing information for Gemini 2.0 Flash is available on Google’s official pricing page. We recommend consulting this resource for the most up-to-date and detailed pricing information.